What is Mistral Large?

Mistral Large has top-tier reasoning capabilities, is multilingual by design, and offers native function calling and a 32k-token context window. The pre-trained model scores 81.2% accuracy on MMLU.

Problem

Users need advanced language models for a range of applications, but existing options are often expensive and limited in context window size and multilingual support.

Solution

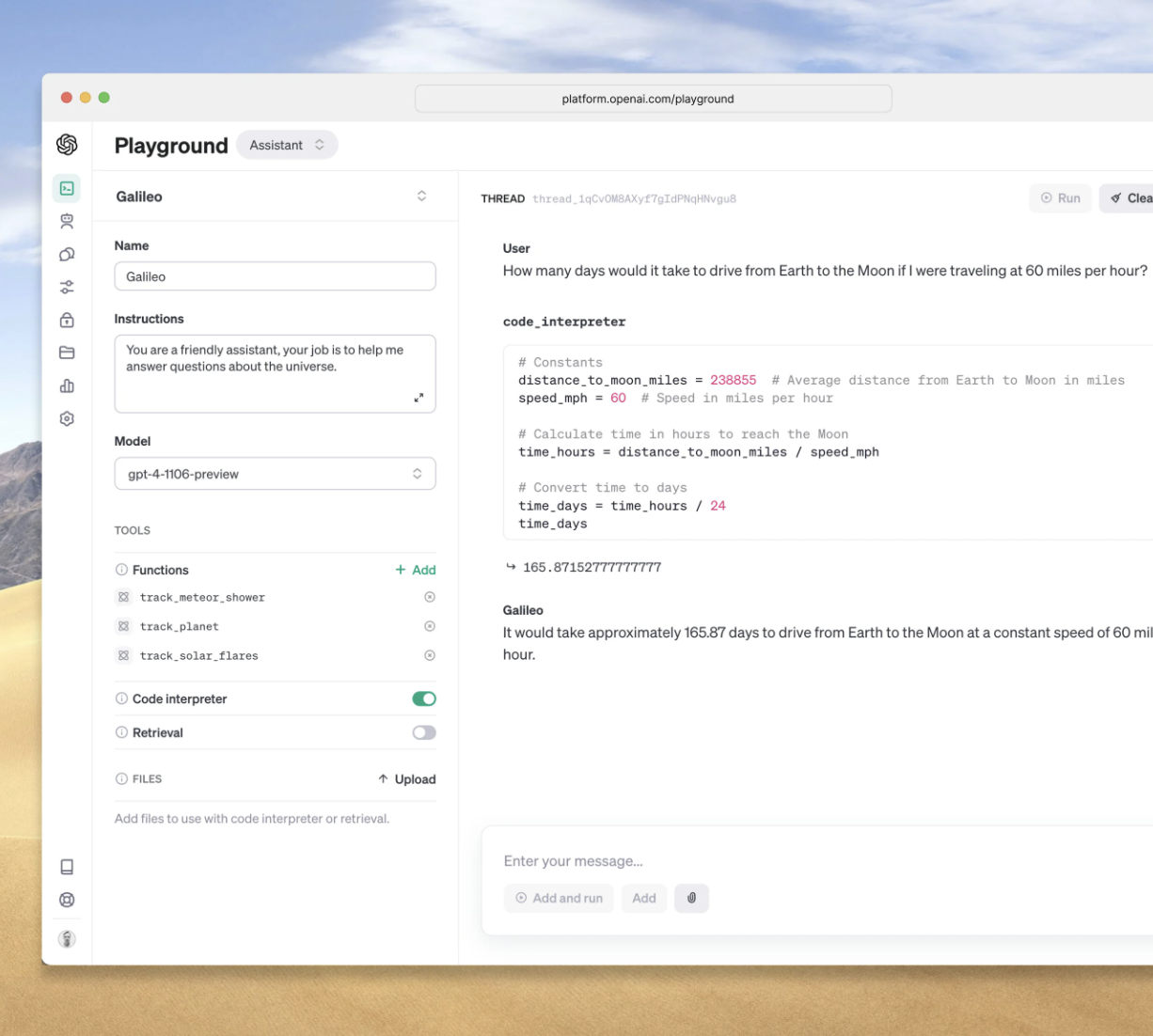

Mistral Large is a language model with top-tier reasoning capabilities, a multilingual design, native function calling, and a large 32k-token context window. It is positioned as a cheaper alternative to GPT-4, and the pre-trained model scores 81.2% accuracy on MMLU.

Customers

Data scientists, AI researchers, developers, and companies requiring advanced AI text processing and generation capabilities for creating applications, conducting research, or enhancing existing systems.

Unique Features

Top-tier reasoning capabilities, multilingual support, native function calling, a large 32k-token context window, and 81.2% accuracy on MMLU make Mistral Large a powerful and versatile language model.

User Comments

It's cost-effective compared to other models.

Impressive context window size for better understanding and generation.

Multi-lingual support is a game-changer for global applications.

High accuracy makes it reliable for critical tasks.

Function calling capabilities enhance its utility in complex applications.

Traction

No specific quantitative traction data is available.

Market Size

No specific market size figure is available for Mistral Large. The language model market is growing rapidly, with significant investment in AI and machine learning technologies.